What is Apache Kafka and what are Apache Kafka use cases?

Growing number of companies are implementing software solutions that leverage Big Data, Machine Learning and cloud-native applications. This advanced and difficult part of software development requires experienced, versatile specialists and the use of scalable and resilience tools.

One of such tools is Apache Kafka. It allows you to take up the challenge posed by Big Data, when broker technologies based on other standards have failed.

What is Kafka? How do businesses benefit from its implementation? What to think of before introducing Kafka in your organization and what are possible Apache Kafka use cases? Here’s a simple guide to Apache Kafka for non-techies and developers who wish to get more familiar with it.

What is Apache Kafka?

Kafka originated around 2008 and their authors were: Jay Kreps, Neha Narkhede and Jun Rao who at that time worked at Linkedin. It was a fierce open-source project, now comercialized by Confluent, and used as a fundamental infrastructure by thousands of companies, ranging from AirBNB to Netflix.

Our idea was that instead of focusing on holding piles of data like our relational databases, key-value stores, search indexes, or caches, we would focus on treating data as a continually evolving and ever growing stream, and build a data system — and indeed a data architecture — oriented around that idea.

Jay Kreps — Confluent Co-Founder

So to speak, Kafka came to life to overcome the problem with continuous streams of data, as there was no solution at that moment that would handle such data flow.

By definition Apache Kafka is a distributed streaming platform for building real-time data pipelines and real-time streaming applications. How does it work? Like a publish-subscribe system that can deliver in-order, persistent messages in a scalable way.

Visit Apache Kafka on GitHub

Being a Confluent Partner, at SoftwareMill we rely on Kafka in various commercial projects and it proved to be a reliable tool for data streaming or producing centralized feeds of operational data. Here we compared it to a similar tool: Apache Kafka vs Apache Pulsar.

How does Apache Kafka work?

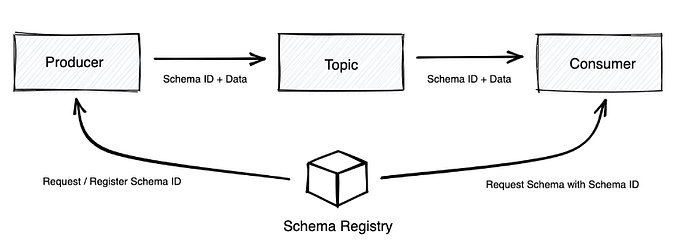

Kafka allows you to send messages between applications in distributed systems. The sender can send messages to Kafka, while the recipient gets messages from the stream published by Kafka.

Messages are grouped into topics — a primary Kafka’s abstraction. The sender (producer) sends messages on a specific topic. The recipient (consumer) receives all messages on a specific topic from many senders. Any message from a given topic sent by any sender will go to every recipient who is listening to that topic.

Kafka is run as a cluster comprised of one or more servers each of which is called a broker and communication between the clients and the servers is done with a simple, high-performance, language agnostic TCP protocol.

Get “Start with Apache Kafka eBook” — lessons learned while consulting clients and using Kafka in commercial projects.

Why Kafka gained momentum?

Usage of Kafka continues to grow across multiple industry segments. The technology fits perfectly into the dynamically changing data-driven markets where huge volumes of generated records need to be processed as they occur.

An immense volume of information arriving at high velocity and high costs of data storage push executives to look for cost-effective, resilient and scalable real-time data processing solutions. Why? It’s all about data…and the customer.

Data is the lifeblood for the modern enterprise. Companies want to discover trends, apply insights, react to customer needs in real time. Automation and predictive analytics are not reserved for unicorns anymore and those who respond quickly to market possibilities gain the advantage over the less data-driven competitors.

Looking for quality tech content about Big Data or Stream Processing? Join Data Times, a monthly dose of tech news curated by SoftwareMill’s engineers.

What are the business benefits of Apache Kafka?

At the moment Kafka is the second most active and visited Apache project used by over 100 000 organizations globally.

Like other messaging systems, Kafka facilitates the asynchronous data exchange between processes, applications and servers, and is capable of processing up to trillions events per day. Every real time big data solution can benefit from its specialized system in order to achieve the desired performance. Kafka helps you ingest and quickly move large amounts of data in a reliable way and is a very flexible tool for communication between loosely connected elements of IT systems.

React to customers in real-time

Apache Kafka uses Kafka Streams, a client library for building applications and microservices. It is a big data technology that enables you to process data in motion and quickly determine what is working, what is not. Now the typical source of data — transactional data such as orders, inventory, and shopping carts — is enriched with other data sources: recommendations, social media interactions, search queries. Adopting the process of analyzing streaming data in real time enables a significant reduction of time between when an event is recorded and when the system and data application reacts to it, so more and more companies can move towards more realtime processing like this. All of these data can feed analytics engines and help companies win customers.

Use the right data

Removing legacy systems and data siloses enables decision makers to transform their data into actionable insights reaching and informing every function of business. With stream processing the state of ever-changing data streams from different sources can be easily detected, maintained and act upon just in time.

Scale and automate

The main selling point of using Apache Kafka solutions is their scalability. The distributed systems are easier to expand and operate as a service. The shift towards event driven microservice architectures enables applications agility, not only from a development and devops point of view but especially a business perspective.

The IT industry is already there and ready for the mass market. It’s no longer only stock exchanges, who can and must afford such systems, where trades and other transactions have to be executed with millisecond latency. Cloud providers offer the whole stack, covering mature tools for orchestrating, scaling, monitoring and tracing — all in an automated fashion.

Read about digital transformation with stream processing.

What are the technological benefits of Apache Kafka?

Software developers and architects love Apache Kafka because it comes with multiple softwares that make it a highly attractive option for data integration. It is becoming the clear choice to handle distributing processing as it offers the whole ecosystem of tools for handling the flow of data in timely and fast manner.

Kafka is designed as a distributed system and can store high volume of data on commodity hardware. By also being a multi-subscription system, it enables published data set to be consumed multiple times. No surprise it is one of the most popular tools for microservices architecture. It serves as an intermediate communication layer and decouples modules from each other. Scalability is achieved by splitting a communication channel, called topic, into multiple partitions. This allows to start new instances of a particular module up to the number of partitions.

Microservices are the new black. Are you sure you’re using microservices?

Kafka has a very active community (Confluent and contributors). The versions are regularly updated, APIs change, new features are often added. Co-founders of Kafka were all LinkedIn employees when they started the project and Kafka originated as an open source project under the Apache Software Foundation in 2011. Back then, Kafka was ingesting more than 1 billion events a day. Recently, LinkedIn has reported ingestion rates of 7 trillion messages a day.

Expanding data sets with Kafka is easier as it scales better than other messaging systems. One of the reasons for it is that Kafka only guarantees order per-partition. The way you scale Kafka is by adding more partitions; messages from each partition can then be processed in parallel.

For some tests on scaling, see our message queue benchmark.

Finally, software developers operate in ecosystems of services which together work towards some higher level business goal. It’s beneficial to make these systems event-driven and Kafka is a great tool for that.

Find out more about Event sourcing using Kafka

Apache Kafka use cases

Major companies are using Kafka in a wide range of use cases. Apache Kafka can come handy when building distributed systems and producing operational monitoring data, tracking user activity in real time, and even implementing event sourcing.

Website activity tracking

With Kafka we can build user activity tracking pipeline as a set of real-time publish-subscribe feeds. Website activity (page views, searches, or other actions users may take) is published to central topics and becomes available for real-time processing, dashboards and offline analytics in data warehouses like Google’s BigQuery.

Metrics

Kafka is often used for operation monitoring data pipelines and enables alerting and reporting on operational metrics. It aggregates statistics from distributed applications and produces centralized feeds of operational data.

Log aggregation

Kafka can be used across an organization to collect logs from multiple services and make them available in standard format to multiple consumers. It provides lower-latency processing and easier support for multiple data sources and distributed data consumption.

Stream processing

A framework such as Spark Streaming reads data from a topic, processes it and writes processed data to a new topic where it becomes available for users and applications. Kafka’s strong durability is also very useful in the context of stream processing.

Event sourcing

While Kafka wasn’t originally designed with event sourcing in mind, it’s design as a data streaming engine with replicated topics, partitioning, state stores and streaming APIs is very flexible. Hence, it’s possible to implement an event sourcing system on top of Kafka without much effort.

Companies that leverage Apache Kafka

Kafka is used heavily in the big data space as a reliable way to ingest and move large amounts of data very quickly.

According to stackshare there are 741 companies that use Kafka. Among them Uber, Netflix, Activision, Spotify, Slack, Pinterest, Coursera and of course Linkendin. Wanna find out more about some of the use cases?

One more fun-fact for those who were still wondering. Jay Kreps is a fan of Franz Kafka but besides this, the name of the streaming platform has nothing more to do with the famous author :)

Should I use Apache Kafka?

Kafka is a messaging system that lets you publish and subscribe to streams of messages. In this way, it is similar to products like ActiveMQ, RabbitMQ, IBM’s MQSeries, and other products. But it is not just a message broker.

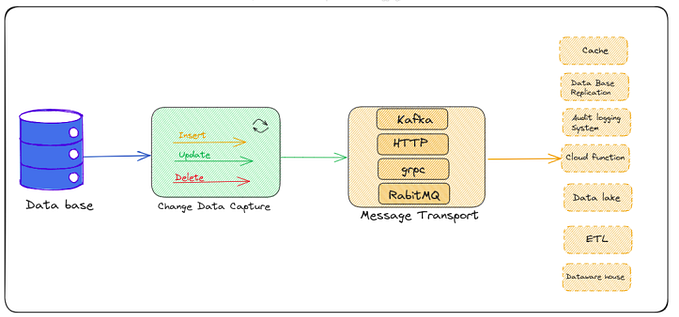

In your organization you migh have various data pipelines that facilitate communication between various services. When plenty of services communicate with each other in real time, their architecture becomes complex, because they need many integrations and various protocols. Apache Kafka can help you decouple such data pipelines and simplify software architecture to make it manageable.

Apache Kafka Implementation Checklist

- Does your business processes require message ordering?

- Do you want to be able to configure retention of the data?

- Do you want to be able to scale number of consumers almost linearly? Or maybe you want to have multiple processes reading the same data independently?

- Does it make sense to have multiple consumers consuming the same data?

- Do you want to integrate message broker with other services, e.g. databases? Or maybe you need a fully fledged stream processing solution?

If you want more Kafka related insights, here is a list of good resources for learning about Apache Kafka, enjoy!

Need help with Apache Kafka?

We are a Certified Technical Partner of Confluent. Our engineering expertise with stream processing and distributed systems applications is proven in commercial projects, workshops and consulting. Let’s talk.